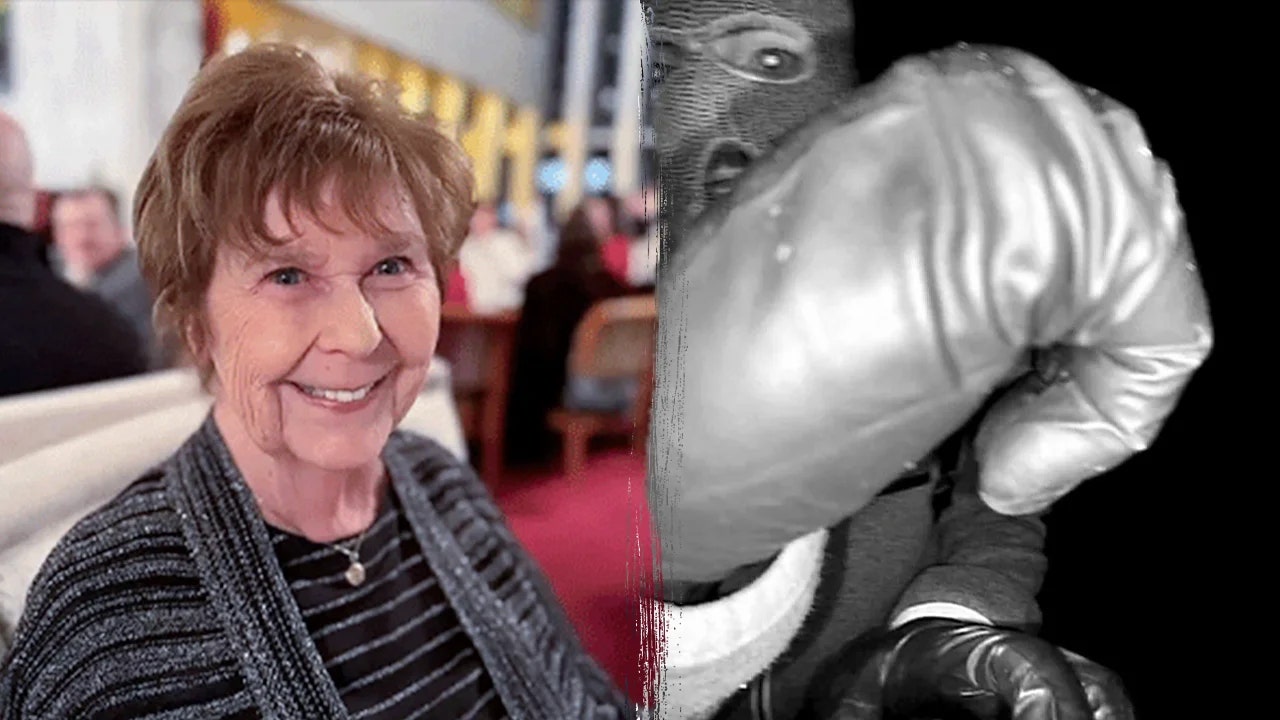

AI models can show human-like gambling behavior

Human gambling addiction has long been marked by behaviors such as the illusion of control, the belief that a win is due after a losing streak, and attempts to recover losses by continuing to bet. Such irrational behavior can also emerge in AI models, according to a new study from researchers at South Korea’s Gwangju Institute of Science and Technology.

The study, which has not yet been peer-reviewed, found that large language models (LLMs) made high-risk gambling decisions, especially when given more autonomy. This tendency could pose risks as the technology becomes increasingly integrated into asset management, said Seungpil Lee, one of the study’s authors. “We will use it [AI] more and more in decision-making, especially in financial situations,” Lee added.

To test how AI models gamble, the authors ran four models (OpenAI’s GPT-4o-mini and GPT-4.1-mini, Google’s Gemini-2.5-Flash and Anthropic’s Claude-3.5-Haiku) through slot machine games. Each model started with $100 and could either keep betting or stop, and the researchers tracked their decisions using an index that measured aggressive betting, extreme betting and loss chasing.

The results showed that all of the LLMs scored higher on the index when they were given more freedom to vary their bet sizes and set their own target amounts, though the degree differed by model, a divergence Lee said may reflect differences in their training data. Gemini-2.5-Flash had the highest bankruptcy rate, at 48 percent, while GPT-4.1-mini had the lowest, at just 6 percent.
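The betting setup described above can be illustrated with a toy simulation. This is a minimal sketch, not the researchers’ code: the 30 percent win probability, 3x payout, 50-round cap, $10 base stake and both scripted betting policies are assumptions chosen for illustration, and the policies stand in for an LLM rather than querying one. It contrasts a player that always bets a fixed amount with one that varies its stake by doubling after every loss, and reports the share of games that end with the player broke.

```python
import random

# Assumed game parameters (hypothetical; not taken from the study)
WIN_PROB = 0.3        # chance that a single bet wins
PAYOUT = 3.0          # a winning bet returns 3x the stake
START_BALANCE = 100   # every game starts with $100, as in the study
MAX_ROUNDS = 50       # hypothetical cap on the number of bets per game
BASE_BET = 10         # hypothetical default stake


def fixed_policy(balance, last_won, last_bet):
    """Always bet the base stake; stop once the balance can't cover it."""
    return BASE_BET if balance >= BASE_BET else 0


def chasing_policy(balance, last_won, last_bet):
    """Toy variable-bet player: doubles the stake after a loss (loss chasing)."""
    if last_won is False:
        return last_bet * 2
    return BASE_BET


def play_game(policy, rng):
    """Play one game; return True if the player goes bankrupt."""
    balance = START_BALANCE
    last_won, last_bet = None, 0
    for _ in range(MAX_ROUNDS):
        # A policy returns 0 to stop; bets are capped at the current balance
        bet = min(policy(balance, last_won, last_bet), balance)
        if bet <= 0:
            return False  # the player chose to stop
        balance -= bet
        last_won = rng.random() < WIN_PROB
        if last_won:
            balance += bet * PAYOUT
        last_bet = bet
        if balance <= 0:
            return True  # went broke
    return False  # reached the round cap with money left


def bankruptcy_rate(policy, n_games=10_000, seed=0):
    """Fraction of simulated games that end with a zero balance."""
    rng = random.Random(seed)
    return sum(play_game(policy, rng) for _ in range(n_games)) / n_games


if __name__ == "__main__":
    print(f"fixed bets:   {bankruptcy_rate(fixed_policy):.1%}")
    print(f"chasing bets: {bankruptcy_rate(chasing_policy):.1%}")
```

Because both players are hand-written rules, any gap between their bankruptcy rates reflects only these assumed strategies; the study’s figures come from the models’ own choices.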

The models also consistently displayed hallmarks of human gambling addiction, such as win chasing, in which gamblers keep betting because they treat their winnings as “free money,” and loss chasing, in which they keep betting to try to win back what they lost. Win chasing was especially common: across the LLMs, the rate of bet increases rose from 14.5 percent to 22 percent during winning streaks, according to the study.

Despite these similarities, Lee emphasized that important differences remain. The results do not mean the models reason exactly as people do, Lee said. “They learned certain characteristics from the way people communicate with each other, and that can affect their decisions.”

That doesn’t mean these human-like tendencies are harmless. AI systems are increasingly embedded in the financial sector, from customer experience tools to fraud detection, forecasting and reporting analysis. Of the 250 banking executives surveyed by MIT Technology Review Insights earlier this year, 70 percent said they use agentic AI in some capacity.

Because these gambling-like behaviors intensify when LLMs are given more freedom, the authors say their use should be paired with monitoring and control mechanisms. “Instead of giving them complete freedom to make decisions, we should be more direct,” Lee said.